Generative AI technologies rely on content from knowledge communities as training data. However, these communities receive little in return and instead face growing moderation burdens from an influx of AI-generated content. Moreover, as platform operators sell community content to AI developers whose products may substitute for contributors' work, these communities see declining web traffic and fewer new contributions, and they struggle to maintain the vibrancy of their knowledge repositories. According to The Pragmatic Engineer, a prominent technology newsletter covering software engineering, traffic on Stack Overflow has declined so dramatically that the platform now generates roughly the same amount of new content as it did when it launched in 2008, a decline driven mostly by generative AI.

Even before AI technologies posed new threats, relationships between online communities and their host platforms were often uneasy. Past research on platforms such as Reddit, Stack Exchange, Tumblr, and DeviantArt reveals a recurring pattern: when platform policies conflict with community values, communities tend to push back. Community members have organized blackouts, suspended moderation, or migrated to alternative platforms altogether. Less well understood, however, is how these conflicts unfold over time, especially in the context of generative AI. How do knowledge contributors resist AI-related policies that conflict with their values? And what happens in the aftermath of such collective action, especially to a community’s governance, including how rules are set, whose voices are recognized, and how participation is enabled?

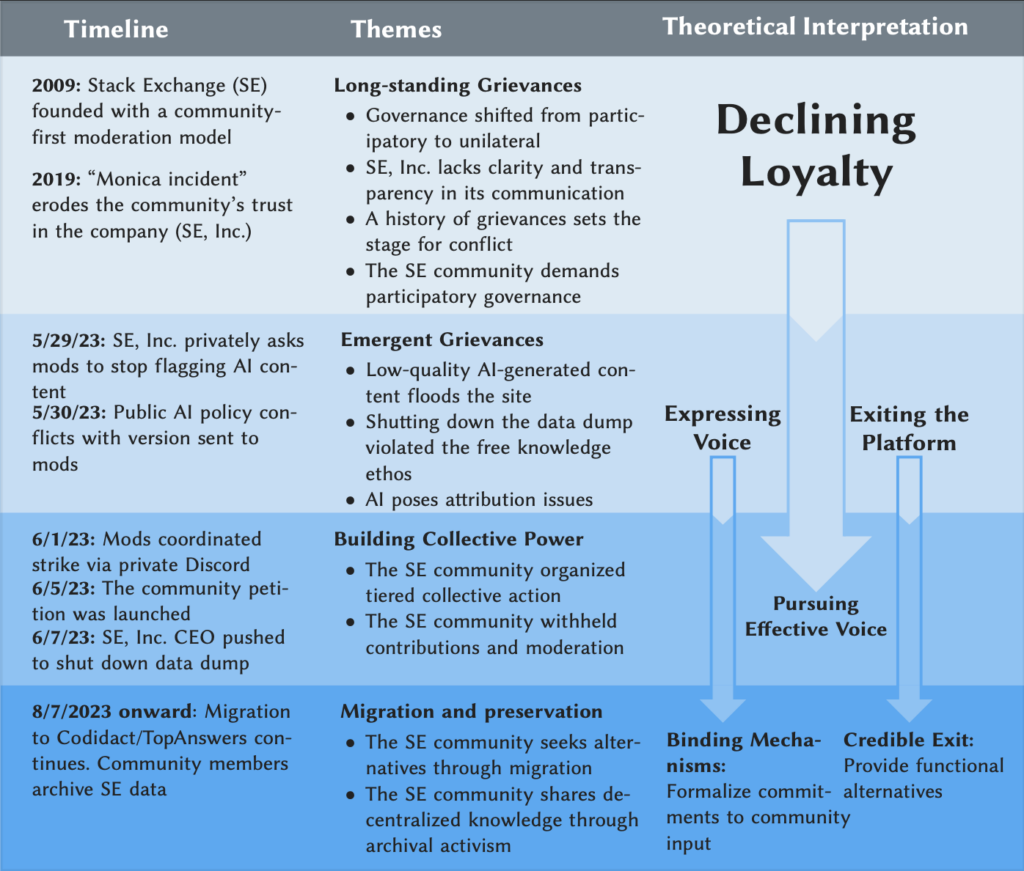

To answer these questions, we examined a major conflict between Stack Exchange, Inc. (SE, Inc.) and its community that occurred in 2023, amid the upheaval that followed the release of large language models (LLMs). Drawing on a qualitative analysis of over 2,000 messages posted on Meta Stack Exchange (the Stack Exchange site designated for policy discussions), as well as interviews with 14 community members, we traced how this conflict emerged, escalated, and evolved. What we found was not a sudden backlash driven solely by AI, but the accumulation of long-standing grievances.

In our interviews, SE community members described years of frustration over declining transparency, accountability, and participatory governance. Although the platform historically supported community self-regulation through mechanisms such as moderator elections and shared moderation responsibilities for users with high reputation, community members increasingly perceived that key decisions were being made by SE, Inc. without meaningful community input. Tensions escalated when SE, Inc. introduced policies related to AI-generated content without consulting moderators or contributors, which many interpreted as a continuation of long-standing exclusion and disregard. In response, moderators and contributors coordinated collective action by suspending moderation activity, signing public petitions, and posting updates in discussions on Meta Stack Exchange. Some also chose to exit the platform, migrating to alternative spaces such as Codidact, an open-source, community-governed platform. The collective action was organized through a tiered communication structure, beginning with a small, closed group of moderators and then spreading across the network’s users.

We interpret these findings through the lens of Albert O. Hirschman’s Exit, Voice, and Loyalty framework. According to Hirschman, members of an organization have two options for expressing dissatisfaction when their loyalty to the organization declines: exit and voice. In the Stack Exchange case, loyalty had already eroded through the accumulation of unresolved grievances rather than a single triggering event. As community members came to believe that their voices were no longer heard, dissatisfaction manifested in two distinct responses: coordinated collective voice through organized resistance, and exit through permanent disengagement from the platform. This pattern highlights how governance crises can emerge even on platforms that formally support community self-regulation, and how declining loyalty can transform routine disagreement into large-scale collective action or exit.

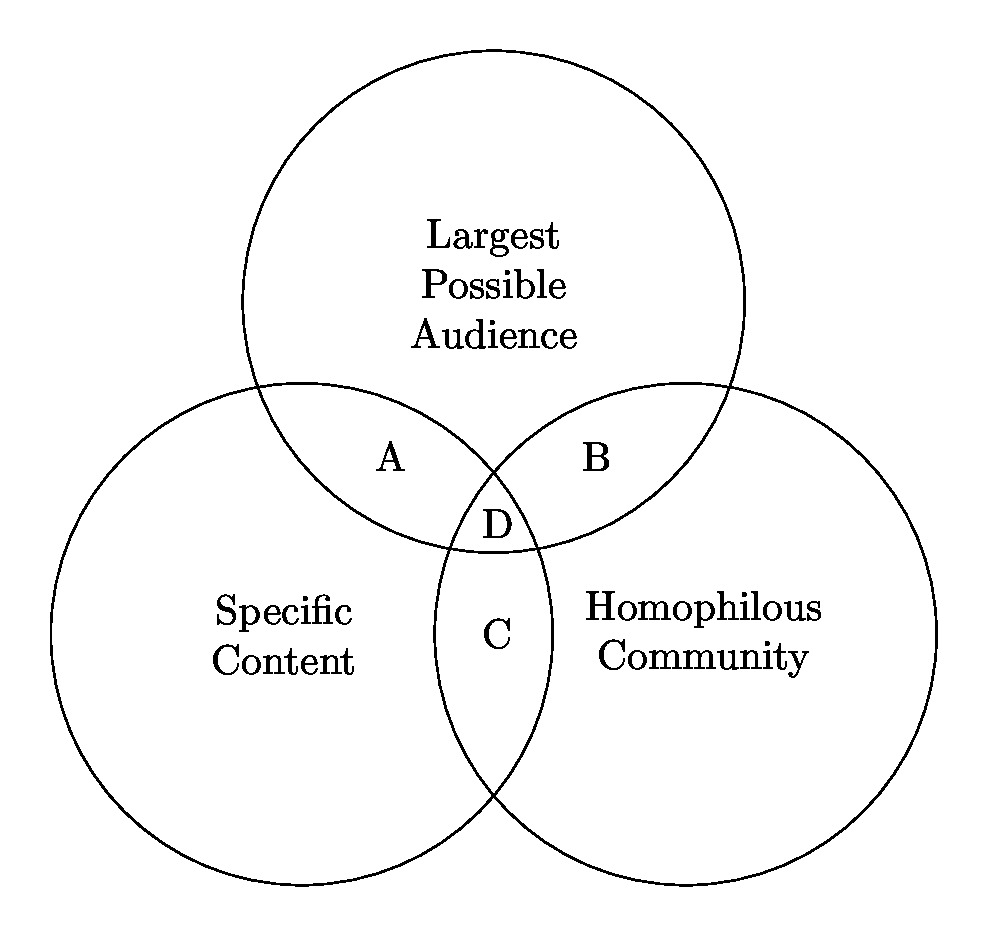

In retrospect, the Stack Exchange strike highlights a broader lesson: community grievances around AI are not just about technical issues, but about deeper governance issues in the relationship between platforms and the communities that sustain them. Managing these crises therefore requires more than better moderation tools or more transparent AI policies. Platforms and big tech companies need to support participatory governance in a more systematic way. One option is to create mechanisms for effective voice by binding platforms to agreements under which community input helps shape decision-making. Another is credible exit, where contributors have alternatives if governance on the original platform fails. When communities can leave without their data being locked in, platforms are more likely to listen. Credible exit not only empowers communities, but also reduces long-term governance risks for platform operators. Conflict is expensive for platforms, and maintaining loyalty requires long-term investment in moderation, communication, and policy enforcement. By contrast, when users have functional alternatives, the possibility of exit can function as a self-binding mechanism that mediates platform behavior and mitigates costly disputes. And when platforms bind themselves to community accountability, conflicts are less likely to escalate into strikes in the first place.

In conclusion, the SE moderation strike was not a sudden backlash driven solely by AI, but the accumulation of long-standing grievances. As generative AI continues to reshape the internet, the future of knowledge production will depend not only on what AI can generate, but also on whether the volunteer contributors who built our shared knowledge commons are given the right to decide what comes next. To sustain this future, we need to institutionalize participatory governance with binding mechanisms and create more credible exit options for communities.