This post was co-authored by Benjamin Mako Hill and Aaron Shaw. We wrote it following a conversation with the CSCW 2018 papers chairs. At their encouragement, we put together this proposal that we plan to bring to the CSCW town hall meeting. Thanks to Karrie Karahalios, Airi Lampinen, Geraldine Fitzpatrick, and Andrés Monroy-Hernández for engaging in the conversation with us and for facilitating the participation of the CSCW community.

False discovery in empirical research

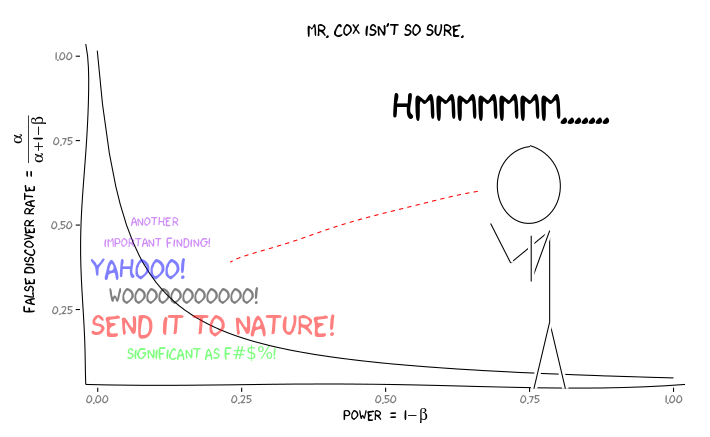

There is growing evidence that an enormous portion of published quantitative research is wrong. In fields where recognition of “false discovery” has prompted systematic re-examinations of published findings, it has led to a replication crisis. For example, a systematic attempt to reproduce influential results in social psychology failed to replicate a majority of them. Another attempt focused on social research in top general science journals; it failed to replicate more than a third of the results and found that effect sizes were, on average, overstated by a factor of two.

Quantitative methodologists argue that the high rates of false discovery are, among other reasons, a function of common research practices carried out in good faith. Such practices include accidental or intentional p-hacking, where researchers try variations of their analysis until they find significant results; a garden of forking paths, where researcher decisions lead to a vast understatement of the number of true “researcher degrees of freedom” in their research designs; the file-drawer problem, in which only statistically significant results get published; and underpowered studies, which make it so that only overstated effect sizes can be detected.
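To make the p-hacking concern concrete, here is a minimal simulation sketch. It is our own illustration under simplifying assumptions, not an analysis from any of the studies mentioned: there is no true effect, each "variation" of the analysis is an independent two-group comparison, and the test is a z-test with known unit variance. The function names and parameters (`any_significant`, `false_positive_rate`, `n_variants`) are hypothetical. Under these assumptions, the chance that at least one of 20 variations comes out significant at the 0.05 level is 1 - 0.95^20, or roughly 0.64. Real analysis variations are usually correlated, so the inflation in practice will differ.

```python
import random
from statistics import NormalDist, mean


def any_significant(rng, n_variants, n_per_group=20, alpha=0.05):
    """Simulate one study with NO true effect.

    Returns True if at least one of the analysis variants is
    'significant' at the given alpha level.
    """
    std_normal = NormalDist()
    for _ in range(n_variants):
        # Assumption: each variant is an independent comparison of two
        # groups drawn from the same distribution (the null is true).
        a = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
        b = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
        # Two-sample z-test with known unit variance (a simplification).
        z = (mean(a) - mean(b)) / (2.0 / n_per_group) ** 0.5
        p_value = 2.0 * (1.0 - std_normal.cdf(abs(z)))
        if p_value < alpha:
            return True
    return False


def false_positive_rate(n_variants, n_studies=1000, seed=1):
    """Share of simulated null studies reporting at least one significant result."""
    rng = random.Random(seed)
    hits = sum(any_significant(rng, n_variants) for _ in range(n_studies))
    return hits / n_studies


single = false_positive_rate(1)    # one pre-specified analysis
twenty = false_positive_rate(20)   # keep trying until something "works"
print(f"1 variant: {single:.2f}, 20 variants: {twenty:.2f}")
```

With a single pre-specified analysis, the simulated rate should sit near the nominal 5%. With 20 tries it should be many times higher, even though nothing real is being detected.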

To the degree that much of CSCW and HCI uses the same research methods and approaches as these other social scientific fields, there is every reason to believe that these issues extend to social computing research. Of course, given that replication is exceedingly rare in HCI, HCI researchers will rarely even find out that a result is wrong.

To date, no comprehensive set of solutions to these issues exists. However, scholarly communities can take steps to reduce the threat of false discovery. One set of approaches to doing so involves the introduction of changes to the way quantitative studies are planned, executed, and reviewed. We want to encourage the CSCW community to consider supporting some of these practices.

Among the approaches developed and adopted in other research communities, several involve breaking up research into two distinct stages: a first stage in which research designs are planned, articulated, and recorded; and a second stage in which results are computed following the procedures in the recorded design (documenting any changes). This stage-based process ensures that designs cannot shift in ways that shape findings without some clear acknowledgement that such a shift has occurred. When changes happen, adjustments can sometimes be made in the computation of statistical tests. Readers and reviewers of the work can also have greater awareness of the degree to which the statistical tests accurately reflect the analysis procedures or not and adjust their confidence in the findings accordingly.

Versions of these stage-based research designs were first developed in biomedical randomized controlled trials (RCTs) and are extremely widespread in that domain. For example, pre-registration of research designs is now mandatory for NIH-funded RCTs, and several journals are reviewing and accepting or rejecting studies based on pre-registered designs before results are known.

A proposal for CSCW

In order to address the challenges posed by false discovery, CSCW could adopt a variety of approaches from other fields that have already begun to do so. These approaches entail more or less radical shifts to the ways in which CSCW research gets done, reviewed, and published.

As a starting point, we want to initiate discussion around one specific proposal that could be suitable for a number of social computing studies and would require relatively little in the way of changes to the research and reviewing processes used in our community.

Drawing from a series of methodological pieces in the social sciences ([1], [2], [3]), we propose a method based on split-sample designs that would be entirely optional for CSCW authors at the time of submission.

Essentially, authors who choose to do so could submit papers that are written (and that will be reviewed and revised) based on one portion of their dataset, with the understanding that the published paper would report identical analytic methods applied to a second, previously unanalyzed portion of the dataset. Authors submitting under this framework would choose to have their papers reviewed, revised and resubmitted, and accepted or rejected based on the quality of the research questions, framing, design, execution, and significance of the study overall. The decision would not be based on the statistical significance of final analysis results.

The idea follows from the statistical technique of “cross validation,” in which an analysis is developed on one subset of data (usually called the “training set”) and then replicated on at least one other subset (the “test set”).

To conduct a project using this basic approach, a researcher would:

- Randomly partition their full dataset into two (or more) pieces.

- Design, refine, and complete their analysis using only one piece identified as the training sample.

- Undergo the CSCW review process using the results from this analysis of the training sample.

- If the submission receives a decision of “Revise and Resubmit,” authors would then make changes to the analysis of the training sample as requested by ACs and reviewers in the current normal way.

- If the paper is accepted for publication, the authors would then (and only then) run the final version of the analysis using another piece of their data identified as the test sample and publish those results in the paper.

- We expect that authors would also publish the training set results used during review in the online supplement to their paper uploaded to the ACM Digital Library.

- Like any other part of a paper’s methodology, the split sample procedure would be documented in appropriate parts of the paper.
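The steps above can be sketched in a few lines of code. This is a minimal illustration under our own assumptions, not a prescribed implementation: the dataset is synthetic, `split_sample` and `analysis` are hypothetical names, and the difference in group means stands in for whatever analysis a real paper would use. The two points it tries to show are that the partition is random but reproducible (a fixed seed that can be documented in the paper), and that the very same analysis function is applied to the test sample only after the analysis has been finalized.

```python
import random
from statistics import mean


def split_sample(records, train_fraction=0.5, seed=2018):
    """Randomly partition records into (training, test) lists.

    A fixed seed makes the partition reproducible, so it can be
    documented alongside the rest of the methodology.
    """
    rng = random.Random(seed)
    shuffled = list(records)   # copy, so the original order is untouched
    rng.shuffle(shuffled)
    cut = int(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


def analysis(sample):
    """Hypothetical stand-in for the full analysis: difference in group means."""
    treated = [r["outcome"] for r in sample if r["treated"]]
    control = [r["outcome"] for r in sample if not r["treated"]]
    return mean(treated) - mean(control)


# Synthetic data for illustration only (assumed true effect of 0.3).
data_rng = random.Random(0)
records = [
    {"treated": i % 2 == 0,
     "outcome": data_rng.gauss(0.3 if i % 2 == 0 else 0.0, 1.0)}
    for i in range(1000)
]

# Step 1: partition once, before looking at any results.
training, test = split_sample(records)

# Steps 2-4: design, refine, and undergo review using the training sample only.
training_estimate = analysis(training)

# Step 5: after acceptance (and only then), run the unchanged analysis
# on the held-out test sample and publish that result.
test_estimate = analysis(test)

print(f"training: {training_estimate:.3f}, test: {test_estimate:.3f}")
```

In practice, the test sample would ideally be stored somewhere the authors do not touch until acceptance, and the training-sample results would go into the online supplement.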

We are unaware of prior work in social computing that has applied this process. Although researchers in data mining, machine learning, and related fields of computer science use cross-validation all the time, they do so differently in order to solve distinct problems (typically related to model overfitting).

The main benefits of this approach (discussed in much more depth in the references at the beginning of this section) would be:

- Heightened reliability and reproducibility of the analysis.

- Reduced risk that findings reflect spurious relationships, p-hacking, researcher or reviewer degrees of freedom, or other pitfalls of statistical inference common in the analysis of behavioral data—i.e., protection against false discovery.

- A procedural guarantee that the results do not determine the publication (or not) of the work—i.e., protection against publication bias.

The most salient risk from the approach is that results might change when authors run the final analysis on the test set. In the absence of p-hacking and similar issues, such changes will usually be small and will mostly affect the magnitude of effect estimates and their associated standard errors. However, some changes might be more dramatic. Dealing with changes of this sort would be harder for authors and reviewers and would potentially involve something along the lines of the shepherding that some papers receive now.

Let’s talk it over!

This blog post is meant to spark a wider discussion. We hope this can happen during CSCW this year and beyond. We believe the procedure we have proposed would enhance the reliability of our work and is workable in CSCW because it involves narrow changes to the way that quantitative CSCW research and reviewing are usually conducted. We also believe this procedure would serve the long-term interests of the HCI and social computing research community. CSCW is a leader in building better models of scientific publishing within HCI through the R&R process, eliminated page limits, the move to PACM, and more. We would like to extend this spirit to issues of reproducibility and publication bias. We are eager to discuss our proposal and welcome suggestions for changes.

[1] Michael L. Anderson and Jeremy Magruder. Split-sample strategies for avoiding false discoveries. Technical report, National Bureau of Economic Research, 2017. https://www.nber.org/papers/w23544

[2] Susan Athey and Guido Imbens. Recursive partitioning for heterogeneous causal effects. Proceedings of the National Academy of Sciences, 113(27):7353–7360, 2016. https://doi.org/10.1073/pnas.1510489113

[3] Marcel Fafchamps and Julien Labonne. Using split samples to improve inference on causal effects. Political Analysis, 25(4):465–482, 2017. https://doi.org/10.1017/pan.2017.22
