One of the fun things about being in a large lab is getting to celebrate everyone’s accomplishments, wins, and the good stuff that happens. Here is a brief-ish overview of some real successes from 2022.

Graduations and New Positions

Our lab gained SIX new grad student members: Kevin Ackermann, Yibin Fang, Ellie Ross, Dyuti Jha, Hazel Chu, and Ryan Funkhouser. Kevin is a first-year graduate student at Northwestern, and Yibin and Ellie are first-year students at the University of Washington. Dyuti, Hazel, and Ryan joined us via Purdue and became Jeremy Foote’s first-ever advisees. We had quite a number of undergraduate RAs. We also gained Divya Sikka from Interlake High School.

Nick Vincent became Dr. Nick Vincent, Ph.D. (Northwestern). He will do a postdoc at the University of California, Davis and the University of Washington. Molly de Blanc earned their master’s degree (New York University). Dr. Nate TeBlunthuis joined the University of Michigan as a postdoc, working with Professor Ceren Budak.

Kaylea Champion and Regina Cheng had their dissertation proposals approved, and Floor Fiers finished their qualifying exams and is now a Ph.D. candidate. Carl Colglazier finished his coursework.

Aaron Shaw started an appointment as the Scholar-in-Residence for King County, Washington, as well as a Visiting Professor in the Department of Communication at the University of Washington.

Teaching

As expected of faculty, Jeremy Foote, Mako Hill, Sneha Narayan, and Aaron Shaw taught classes. As a class teaching assistant, Kaylea won an Outstanding Teaching Award! Floor also taught a public speaking class. CDSC members also served as teaching assistants, led workshops, and gave guest lectures in classes.

Presentations

This list is far from complete, but it includes some highlights!

Carl presented at ICA alongside Nicholas Diakopoulos, “Predictive Models in News Coverage of the COVID-19 Pandemic in the United States.”

Floor presented at the Eastern Sociological Society (ESS), AoIR (Association of Internet Researchers), and ICA. They won a top paper award at the National Communication Association (NCA): Walter, N., Suresh, S., Brooks, J. J., Saucier, C., Fiers, F., & Holbert, R. L. (2022, November). The Chaffee Principle: The Most Likely Effect of Communication…Is Further Communication. National Communication Association (NCA) National Convention, New Orleans, LA.

Kaylea had a whopping two papers at ICA, a keynote at the IEEE Symposium on Digital Privacy and Social Media, and presentations at the CSCW Doctoral Consortium, a CSCW workshop, and the DUB Doctoral Consortium. She also participated in Aaron Swartz Day, SeaGL, CHAOSSCon, MozFest, and an event at UMass Boston.

Molly also participated in Aaron Swartz Day and a workshop at CSCW on volunteer labor and data.

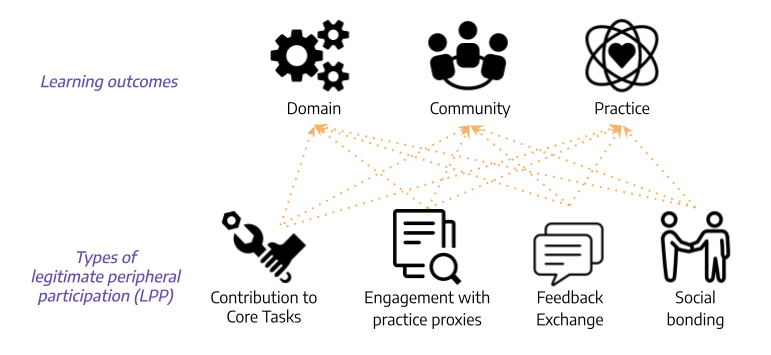

Regina gave presentations to the MakeCode team at Microsoft Research, the Expertise@scale Salon (Emory University), the Microsoft Research HCI Seminar, and CSCW (“Many Destinations, Many Pathways: A Quantitative Analysis of Legitimate Peripheral Participation in Scratch” and “Feedback Exchange and Online Affinity: A Case Study of Online Fanfiction Writers”), among others. She attended CHI and NAACL (with two additional papers). Regina’s paper with Sayamindu Dasgupta and Mako Hill at CHI 2022 (“How Interest-Driven Content Creation Shapes Opportunities for Informal Learning in Scratch: A Case Study on Novices’ Use of Data Structures”) won a Best Paper Honorable Mention Award.

Sohyeon was at GLF as a knowledge steward and presented two posters at the HCI+D Lambert Conference (one with Emily Zou and one with Charlie Kiene, Serene Ong, and Aaron). She also presented at ICWSM, had posters at ICSSI and IC2S2, and organized a workshop at CSCW. In addition to more traditional academic presentations, Sohyeon was on a fireside chat panel hosted by d/arc server, guest lectured at the University of Washington and Northwestern, and met with Discord moderators to talk about heterogeneity in online governance. Sohyeon also won the Half-Bake Off at the CDSC fall retreat.

Public Scholarship

We did a lot of public scholarship this year! In addition to giving presentations, leading workshops, and organizing public-facing events, CDSC also ran the Science of Community Dialogue Series. Presenters from within CDSC included Jeremy Foote, Sohyeon Hwang, Nate TeBlunthuis, Charlie Kiene, Kaylea Champion, Regina Cheng, and Nick Vincent. Guest speakers included Dr. Shruti Sannon, Dr. Denae Ford, and Dr. Amy X. Zhang. To attend future Dialogues, sign up for our low-volume email list!

These events are organized by Molly, with assistance from Aaron and Mako.

Publications

Rather than listing publications here, you can check them out on the wiki.