This is a repost of Doug Parry's article on the UW iSchool News website. The project is being driven by Stefania Druga, who is part of the Community Data Science learning team, and Mako. Jason Yip is a friend of the group.

A decade ago, teaching kids to code might have seemed far-fetched to some, but coding curricula are now being widely adopted across the country. Recently, researchers have turned their attention to the next wave of technology: artificial intelligence. As AI has a growing impact on our lives, can kids benefit from learning how it works?

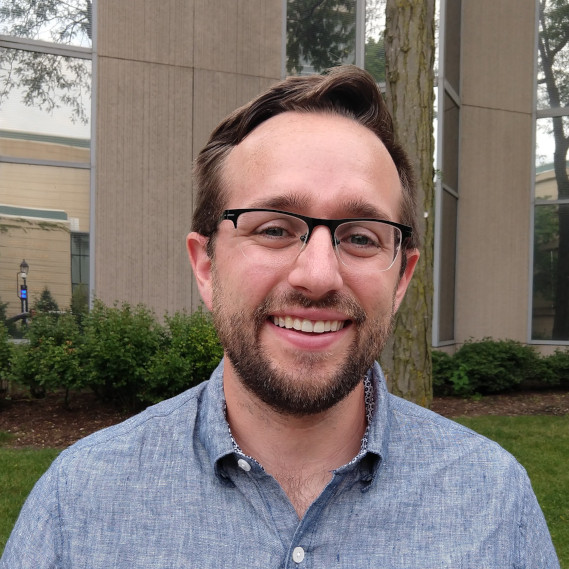

A three-year, $150,000 award from the Jacobs Foundation Research Fellowship Program will help answer that question. The fellowship awarded to Jason Yip, an assistant professor at the University of Washington Information School, will allow a team of researchers to investigate ways to educate kids about AI.

Stefania Druga, a first-year Ph.D. student advised by Yip, is among the researchers spearheading the effort. Druga came to the iSchool after earning her master's at the Massachusetts Institute of Technology, where she launched Cognimates, a platform that teaches children how to train AI models and interact with them.

Druga’s desire to take Cognimates to the next level brought her to the University of Washington Information School and to her advisor, Yip, whose KidsTeam UW works with children to design technology. KidsTeam treats children as equal partners in the design process, ensuring the technology meets their needs — an approach known as co-design.

At MIT, “I realized there was only so far we could go,” Druga said. “In order for us to imagine what the future interfaces of AI learning for kids would look like, we need to have this longer-term relationship and partnership with kids, and co-design with kids, which is something Jason and the team here have done very well.”

Built on the widely used Scratch programming language, Cognimates is an open-source platform that gives kids the tools to teach computers how to recognize images and text and play games. Druga hopes the next iteration will help children truly understand the concepts behind AI — what is the robot “thinking” and who taught it to think that way? Even if they don’t grow up to be programmers or software engineers, the generation of “AI natives” will need to understand how technology works in order to be critical users.

“It matters as a new literacy,” Druga said, “especially for new generations who are growing up with technologies that become so embedded in things we use on a regular basis.”

Over the course of the fellowship, the research team will work with international partners to develop an AI literacy educational platform and curriculum in multiple languages for use in different settings, in both more- and less-developed parts of the world.

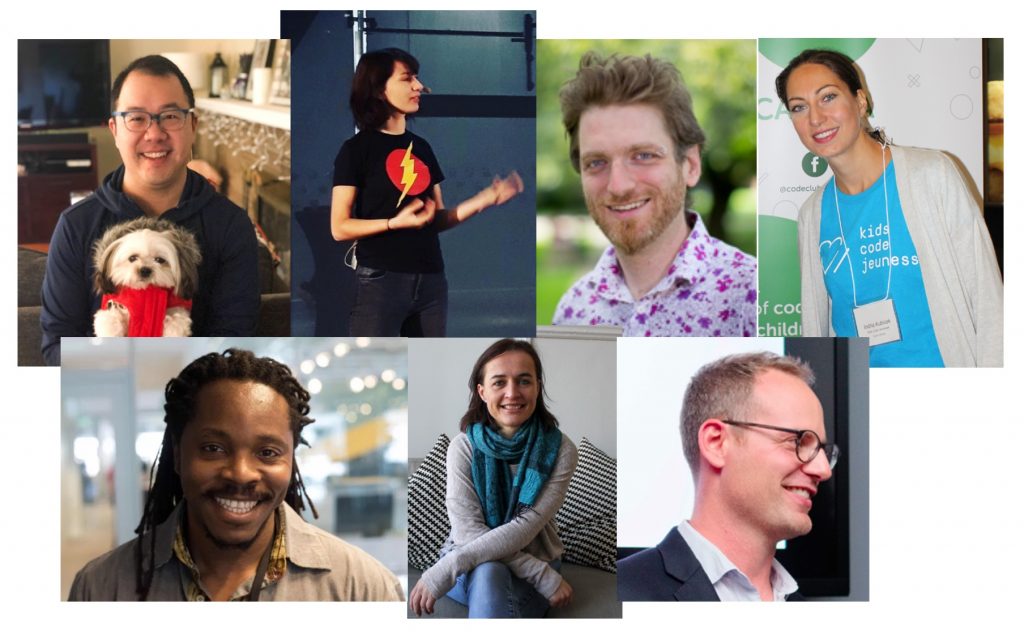

Partners include Kate Arthur, CEO of Kids Code Jeunesse in Montreal; Michael Preston, executive director of the Joan Ganz Cooney Center at Sesame Workshop; David Sengeh, the minister of basic and secondary education for the government of Sierra Leone; and Benjamin Mako Hill, an assistant professor in the UW Department of Communication.

For Yip, the project brings together his Ph.D. student's work, his work with KidsTeam, and other recent research he has conducted on how families interact with AI.

“For me, it’s a proud moment when an advisee has a really cool vision that we can build together as a team,” Yip said. “This is a nice intersection of all of us coming together and thinking about what families need to understand artificial intelligence.”

The Jacobs Foundation fellowship program is open to early- and mid-career researchers from all scholarly disciplines around the world whose work contributes to the development and living conditions of children and youth. It’s highly competitive, with 10-15 fellowships chosen from hundreds of submissions each year.

If you are interested in getting involved with this project or supporting it in any way, you may contact us at cognimates[a]gmail.com.

Further information about this project is available here: http://cognimates.me/research