The 73rd annual conference of the International Communication Association (ICA) is coming up soon. This year, the conference takes place in Toronto, Canada, and a subset of our collective is showing up to present work in person. We are looking forward to meeting up, talking about research, and hanging out together!

ICA takes place from Wednesday, May 24, to Monday, May 29, and CDSC members will take on various roles across a number of conference programs, including chairing sessions, giving presentations, and co-organizing a preconference. Here is a list of our participation in chronological order, so feel free to join us!

Thursday, May 25

We start off with a presentation by Yibin Fan on Thursday at 10:45 am in the International Living Learning Centre of Toronto Metropolitan University, at the Political Communication Graduate Student Preconference. In a panel on The Causes and Outcomes of Political Polarization and Violence, Yibin will present a paper entitled “Does Incidental Political Discussion Make Political Expression Less Polarized? Evidence From Online Communities”.

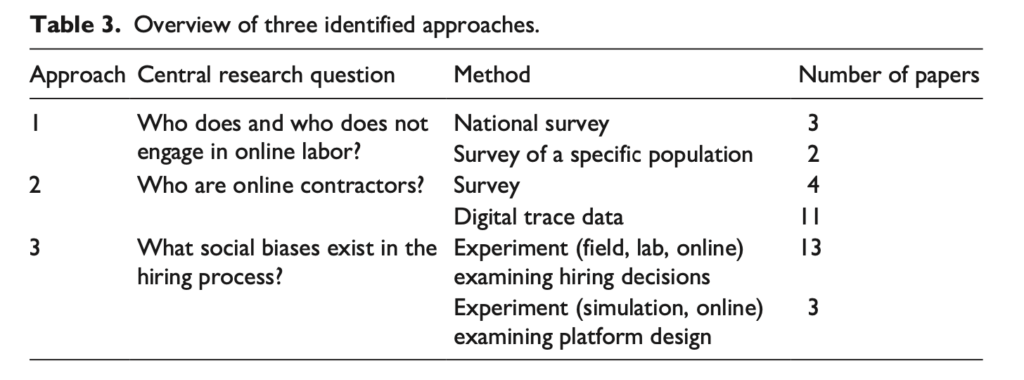

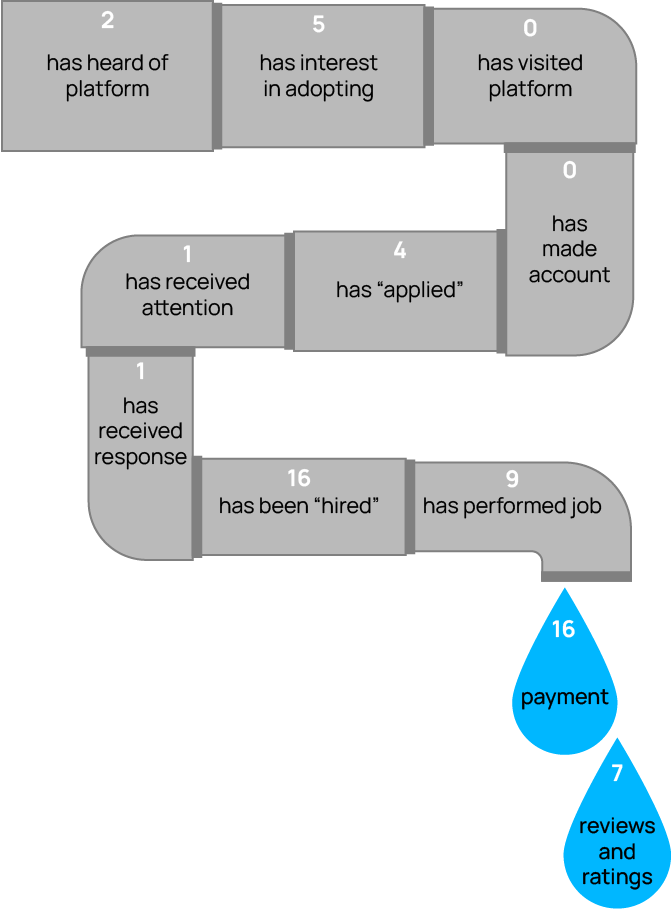

Later on Thursday, another preconference, New Frontiers in Global Digital Inequalities Research, will take place in M – Room Linden (Sheraton) from 1:30 pm to 5:00 pm. Floor Fiers will serve as a co-chair alongside a number of scholars from various international academic institutions, and they will give a presentation on “The Gig Economy: A Site of Opportunity Vs. a Site of Risk?”. This preconference is affiliated with the Communication and Technology Division and the Communication Law & Policy Division of ICA.

Friday, May 26

On Friday, Carl Colglazier will present in a panel on Disinformation, Politics and Social Media at 3:00 pm in M – Room York (Sheraton). The presentation is entitled “The Effects of Sanctions on Decentralized Social Networking Sites: Quasi-Experimental Evidence From the Fediverse”.

Sunday, May 28

On Sunday, we will take on several roles across various sessions. In the morning, Yibin Fan will serve as the moderator for a research paper panel on Political Deliberation and Expression, affiliated with the Political Communication Division, at 9:00 am in 2 – Room Simcoe (Sheraton).

Next comes our highlight, a paper that won the Top Paper Award from the Computational Methods Division: Nathan TeBlunthuis will present a methodological research paper entitled “Automated Content Misclassification Causes Bias in Regression: Can We Fix It? Yes We Can!” in a panel on Debate, Deliberation and Discussion in the Public Sphere at noon in M – Room Maple East (Sheraton). This is a project on which Nate collaborates with Valerie Hase at LMU Munich and Chung-hong Chan at the University of Mannheim. Congratulations to them on winning the Top Paper Award!

Last but not least, we will finish off our ICA 2023 with our faculty members serving as chairs and discussants in the Computational Methods Research Escalator Session at 1:30 pm in M – Room Maple West (the same room as Nate’s presentation!). The session is designed to connect junior scholars who have less experience with publishing to more senior researchers in the field. As the Call for Papers by the Computational Methods Division says, “Research escalator papers provide an opportunity for less experienced researchers to obtain feedback from more senior scholars about a paper-in-progress, with the goal of making the paper ready for submission to a conference or journal.” Aaron Shaw, together with Matthew Weber at Rutgers University, will chair the session. Benjamin Mako Hill and Jeremy Foote, together with a number of scholars from other institutions, will serve as discussants to help improve the research presented there.

We look forward to sharing our research and connecting with you at ICA!