This winter, the Community Data Science Collective launched a Community Dialogues series. These are meetings in which we invite community experts, organizers, and researchers to get together to share their knowledge of community practices and challenges, recent research, and how that research can be applied to support communities. We had our first meeting in February, with presentations from Jeremy Foote and Sohyeon Hwang on small communities and Nate TeBlunthuis and Charlie Kiene on overlapping communities.

Watch the introduction video starring Aaron Shaw!

“Joining the Community” by Infomastern is marked with CC BY-SA 2.0.

What we covered

Here are some quick summaries of the presentations. After the presentations, we formed small groups to discuss how what we learned related to our own experiences and knowledge of communities.

Finding Success in Small Communities

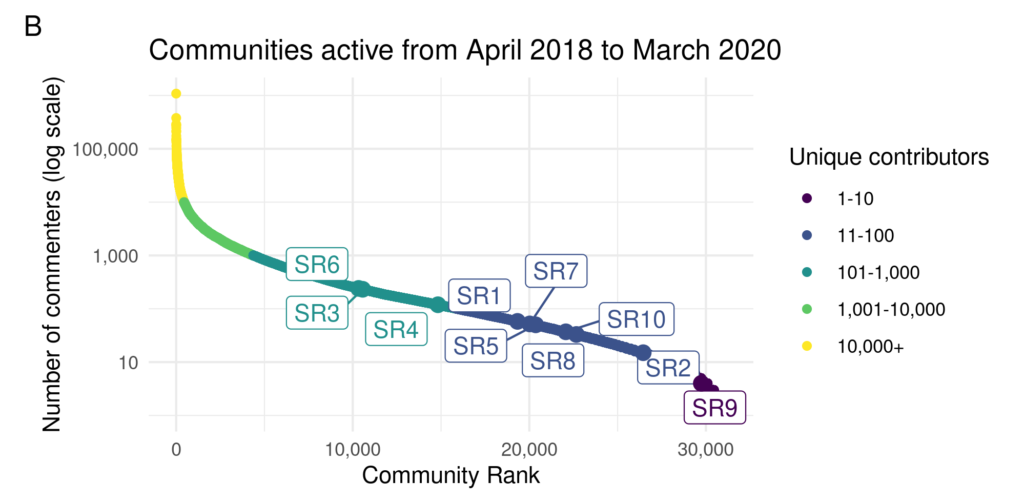

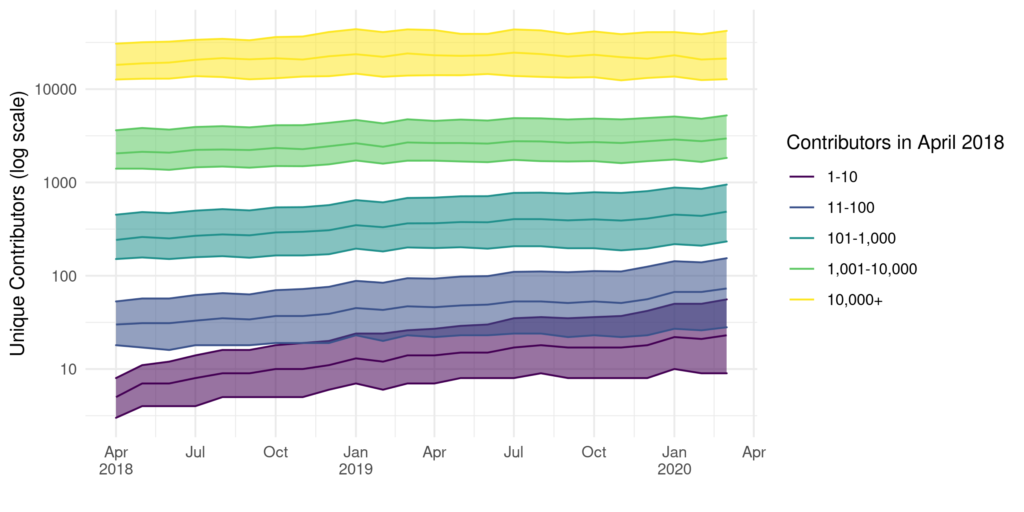

Small communities often stay small, medium ones stay medium, and big ones stay big. Meteoric growth is uncommon. User control and content curation improve user experience. Small communities help people define their expectations. Participation in small communities is often very salient and helps participants build group identity, but not personal relationships. Growth doesn’t mean success, and we need to move beyond equating the two and beyond relying solely on quantitative metrics to judge our success. Being small can be a feature, not a bug!

We built a list of discussion questions collaboratively. It included:

- Are you actively trying to attract new members to your community? Why or why not?

- How do you approach scale/size in your community/communities?

- Do you experience pressure to grow? From where? Towards what end?

- What kinds of connections do people seek in the community/communities you are a part of?

- Can you imagine designs/interventions to draw benefits from small communities or sub-communities within larger projects/communities?

- How to understand/set community members’ expectations regarding community size?

- “Small communities promote group identity but not interpersonal relationships.” This seems counterintuitive.

- How do you manage challenges around growth incentives/pressures?

Why People Join Multiple Communities

People join topical clusters of communities, which have more mutualistic relationships than competitive ones. There is a trilemma (like a dilemma, but with three options) between a large audience, specific content, and homophily (like-mindedness). No community can do everything, and it may be better for participants and communities to have multiple, overlapping spaces. This can be more engaging, generative, fulfilling, and productive. People develop portfolios of communities, which can involve many small communities.

Questions we had for each other:

- Do members of your community also participate in similar communities?

- What other communities are your members most often involved in?

- Are they “competing” with you? Or “mutualistic” in some way?

- In what other ways do they relate to your community?

- There is a “trilemma” between the largest possible audience, specific content, and homophilous (likeminded/similar folks) community. Where does your community sit inside this trilemma?

Slides and videos

How you can get involved

You can subscribe to our mailing list! We’ll be making announcements about future events there. It will be a low-volume mailing list.

Acknowledgements

Thanks to speakers Charlie Kiene, Jeremy Foote, Nate TeBlunthuis, and Sohyeon Hwang! Kaylea Champion was heavily involved in planning and decision making. The vision for the event borrows from the User and Open Innovation workshops organized by Eric von Hippel and colleagues, as well as others. This event and the research presented in it were supported by multiple awards from the National Science Foundation (DGE-1842165; IIS-2045055; IIS-1908850; IIS-1910202), Northwestern University, the University of Washington, and Purdue University.

Session summaries and questions above were created collaboratively by event attendees.