More than a billion people visit Wikipedia each month and millions have contributed as volunteers. Although Wikipedia exists in 300+ language editions, more than 90% of those editions have fewer than one hundred thousand articles. Many small editions are in languages spoken by small numbers of people, but the relationship between the size of a Wikipedia language edition and that language’s number of speakers (or even the number of viewers of that edition) varies enormously. Why do some Wikipedias engage more potential contributors than others? We attempted to answer this question in a study of three Indian language Wikipedias that will be published and presented at the ACM Conference on Human Factors in Computing Systems (CHI 2022).

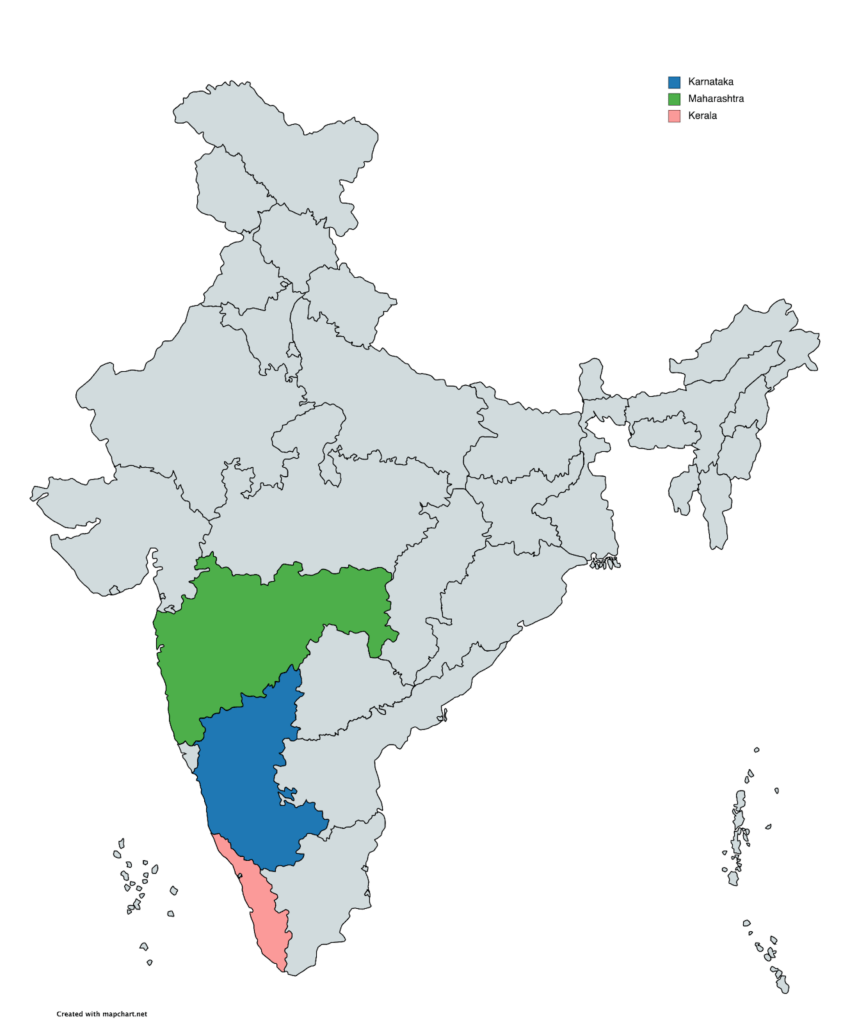

To conduct our study, we selected three Wikipedia language communities that correspond to the official languages of three neighboring states of India: Marathi (MR) from the state of Maharashtra, Kannada (KN) from the state of Karnataka, and Malayalam (ML) from the state of Kerala (see the map in the right panel of the figure above). While the three projects share goals, technological infrastructure, and a similar set of challenges, Malayalam Wikipedia’s community engaged its language speakers in contributing to Wikipedia at a much higher rate than the others. The graph above (left panel) shows that although MR Wikipedia has twice as many viewers as ML Wikipedia, ML Wikipedia has more than double the number of articles that MR Wikipedia has.

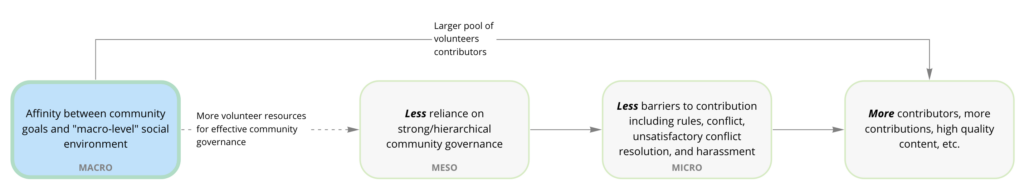

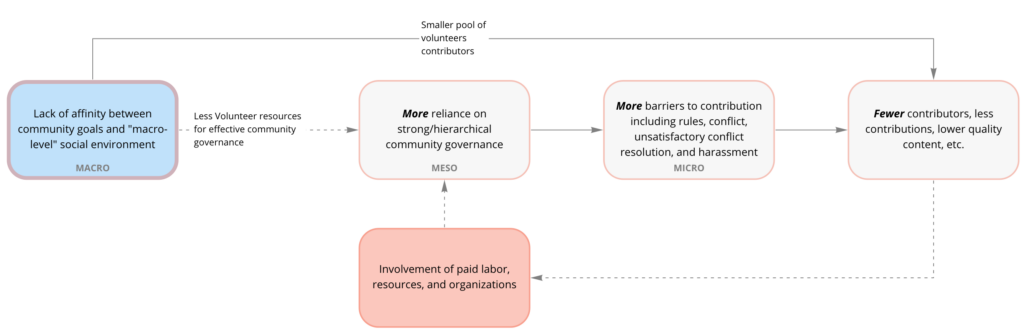

Our study focused on identifying differentiating factors between the three Wikipedias that could explain these differences. Through a grounded theory analysis of interviews with 18 community participants from the three projects, we identified two broad explanations of a “positive participation cycle” in Malayalam Wikipedia and a “negative participation cycle” in Marathi and Kannada Wikipedias.

As the first step of our study, we conducted semistructured interviews with active participants of all three projects to understand their personal experiences and motivation; their perceptions of dynamics, challenges, and goals within their primary language community; and their perceptions of other language Wikipedias.

We found that MR and KN contributors experience more day-to-day barriers to participation than ML contributors, and that these barriers hinder their activity and impede engagement. For example, both MR and KN members reported a large number of content disputes that they felt reduced their desire to contribute.

But why do some Wikipedias like MR or KN have more day-to-day barriers to contribution like content disputes and low social support than others? Our interviews pointed to a series of higher-level explanations. For example, our interviewees reported important differences in the norms and rules used within each community as well as higher levels of territoriality and concentrated power structures in MR and KN.

Once again, though: why do the MR and KN Wikipedias have these issues with territoriality and centralized authority structures? Here we identify a third, even higher-level set of differences in the social and cultural contexts of the three language-speaking communities. For example, MR and KN community members attributed low engagement to broad cultural attitudes toward volunteerism and differences in their language community’s engagement with free software and free culture.

The two flow charts above visualize the explanatory mapping of divergent feedback loops we describe. The top part of the figure illustrates how the relatively supportive macro-level social environment in Kerala led to a larger group of potential contributors to ML as well as a chain reaction of processes that led to a Wikipedia better able to engage potential contributors. The process is an example of a positive feedback cycle. The second, bottom part of the figure shows the parallel, negative feedback cycle that emerged in MR and KN Wikipedias. In these settings, features of the macro-level social environment led to a reliance on a relatively small group of people for community leadership and governance. This led, in turn, to barriers to entry that reduced contributions.

One final difference between the three Wikipedias was the role that paid labor from NGOs played. Because the MR and KN Wikipedias struggled to recruit and engage volunteers, NGOs and foundations deployed financial resources to support the development of content in Marathi and Kannada, but not in ML to the same degree. Our work suggested this tended to further concentrate power among a small group of paid editors in ways that aggravated the meso-level community struggles. This is shown in the red box in the second (bottom) row of the figure.

The results from our study provide a conceptual framework for understanding how the embeddedness of social computing systems within particular social and cultural contexts shapes various aspects of the systems. We found that experience with participatory governance and free/open-source software in the Malayalam community supported high engagement of contributors. Counterintuitively, we found that financial resources intended to increase participation in the Marathi and Kannada communities hindered the growth of these communities. Our findings underscore the importance of social and cultural context in the trajectories of peer production communities. These contextual factors help explain patterns of knowledge inequity and engagement on the internet.

Please refer to the preprint of the paper for more details on the study and our design suggestions for localized peer production projects. We’re excited that this paper has been accepted to CHI 2022 and received the Best Paper Honorable Mention Award! It will be published in the CHI 2022 conference proceedings and presented at the conference in May. The full citation for this paper is:

Sejal Khatri, Aaron Shaw, Sayamindu Dasgupta, and Benjamin Mako Hill. 2022. The social embeddedness of peer production: A comparative qualitative analysis of three Indian language Wikipedia editions. In CHI Conference on Human Factors in Computing Systems (CHI ’22), April 29-May 5, 2022, New Orleans, LA, USA. ACM, New York, NY, USA, 18 pages. https://doi.org/10.1145/3491102.3501832

If you have any questions about this research, please feel free to reach out to one of the authors: Sejal Khatri, Benjamin Mako Hill, Sayamindu Dasgupta, and Aaron Shaw.

Attending the conference in New Orleans? Come attend our live presentation on May 3 at 3 pm at the CHI program venue, where you can discuss the paper with all the authors.
