New hires from the rank-and-file arguing with the CEO in public. Employee-chosen projects and a management team reluctant to say no. Few if any written rules. No offices. Staff arriving and departing when they choose. Messes everywhere. Some companies—especially technology firms—describe their ways of working as remaking the model of the modern business. They describe ways of working that were unthinkable some years ago. But has anything really changed from the organization models of the past, or are these features mostly hype and marketing obscuring the same old bureaucracy and hierarchy?

Although sociologist Catherine J. Turco is not a Communication scholar, her work offers vital insight into how communication structures are reordering relationships, with significant implications for the field and discipline of Communication. In this brief and readable ethnographic study, Turco describes ‘TechCo,’ a social media marketing company, in rich detail. TechCo employees have access to the perks and features familiar to those who study firms in Silicon Valley: hack nights, freedom to experiment, flexible schedules, an open floor plan, a “dogs welcome” policy, free beer, and so on. The company seeks to embody its own industry, positioning itself as open, freewheeling, and engaged, just like the social media platforms it helps its customers to use. Beyond this external branding, the founders have set an explicit internal goal: to create a company that is intentionally more ‘open’ and less hierarchical than traditional firms. How is this goal accomplished, and is it indeed accomplished at all?

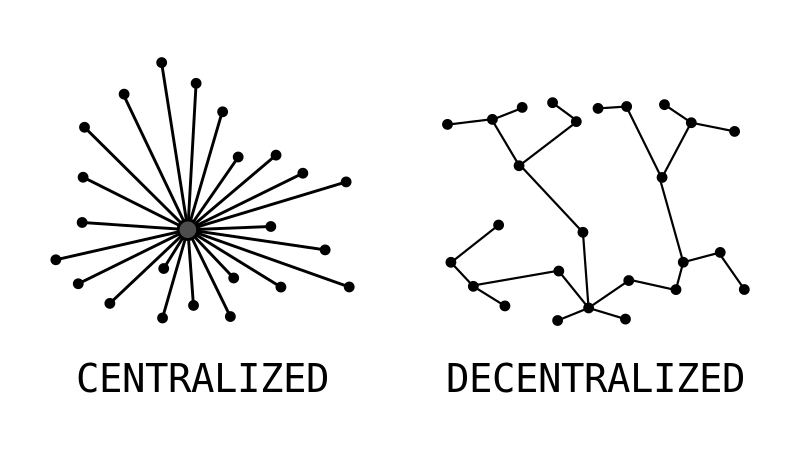

Turco’s answer to this question is that these companies accomplish half of this goal. Companies are indeed able to deliberately open their communication, disclosing financial details that in many firms are held exclusively by C-level leadership and allowing frank, public feedback from rank-and-file staff to executive leadership. However, they do so while leaving their hierarchical structure for decision-making largely intact. Turco argues that staff are satisfied by this arrangement and in fact prefer to have decision-making power left in the hands of executives.

Drawing on a theoretical background stretching from Max Weber to Albert Hirschman to Sherry Turkle, Turco elaborates a theory of the conversational firm. In the conversational firm, voice and decision-making power are intentionally decoupled. Therefore, these two factors can be analyzed distinctly and in tension with one another. This poses a particular challenge to lines of research that treat voice and authority as intertwined or interchangeable.

Communication scholars may find much to reflect on in her careful articulation of what is meant and accomplished by the idea of “openness” in a firm, in her exploration of how employee use of social media can both benefit and harm a firm, and in her case study of how efforts to brand and disseminate company culture can be both a marketing boon and an internal headache.

The book opens with conversations with the founders of TechCo about their desire for “radical openness” (p. 2) and an anti-bureaucratic approach to structure. Turco describes the company’s experiences with openness and anti-bureaucratic tendencies from a range of perspectives: as reflected in the experiences of an eager young woman who is new to the workforce, as observed in Hack Nights, as visible within the company’s rollicking wiki discussions about everything from financial information to kitchen cleanup duties, and as revealed in its grappling with a lack of strict policies (instead, TechCo asks employees to “Use Good Judgment”).

Through the first three chapters, Turco asks what this openness means. She finds that although the founders’ goal is to be transparent and less hierarchical than traditional firms, hierarchy remains and is even desired by employees. What is truly different about TechCo is instead its embrace of employee perspectives, and the employees’ trust that the firm will take them into account. Through long-running discussions on the company wiki and chat platforms, town hall meetings, and cross-departmental dinners, we see frank conversations unfold and influence the direction of the company. Turco also observes that employees primarily seek to be heard: they don’t have, and often don’t want, decision rights, but they want and receive voice rights.

Turco concludes that, despite the findings of prior work that bureaucracy is largely indestructible and reproduces itself, openness in communication allows greater freedom for employees, at least bending the bars of what Weber called the iron cage. The book returns to the limitations of anti-bureaucratic approaches throughout, and chapter six offers a series of examples: how the company came to have a traditional human resources department despite the founders’ repeated public expressions of distaste for formal HR, and the concerns about noise, mess, and distraction raised by open ‘officeless’ seating plans.

In chapter four, Turco turns attention away from TechCo’s internal dialog and to the relationship between TechCo and external audiences, in particular the absence of a social media policy. Many firms impose strict rules on how employees comport themselves on social media, given the risk a company faces from public response to employee behavior and disclosures. TechCo instead relies, here again, on its “Use Good Judgment” guideline. When employees make mistakes that reflect poorly on the company, TechCo’s response is to treat this as a learning opportunity, turning the event into training materials to shape employee understanding of what good judgment looks like (and doesn’t look like).

Chapter five offers a case study of TechCo’s external communication about its company culture. The founders disseminated a ‘manifesto’ that combined their beliefs about TechCo’s culture with their beliefs about how companies should be organized to succeed in the current era. Although the document received extensive positive attention and served as a recruiting tool, existing employees were troubled by gaps between their experience and the company’s description of its culture. Employees also voiced the irony of a document developed in a top-down way describing a participatory and bottom-up culture. Satisfaction plummeted. Over time, however, continued conversation about the document and revisions to it seem to have allowed employees to regain their sense of voice, eventually resolving the crisis.

Published in 2016 from fieldwork that ended in 2013, this account does not allow us to see how the conversational firm fared during recent events that have disrupted the structure, functioning, and culture of organizations, such as the isolation of the Covid-19 pandemic, the migration to remote work, and questions about returning to the office.

In elaborating a theory of how firms can be conversational, decoupling decision-making power and voice, the book offers a useful framework for scholars examining the future of work and organizations, as well as other topics of enduring interest in Communication: the shifting relationship between firms and publics and the continued blurring of the public and the private in social media. Of key interest is the extent to which Communication theories about voice, the constitutive power of communication, and factors such as concertive control can be applied to these organizations.

Graduate students with an interest in ethnographic methods will find particular value in the blunt personal narratives that make up an extended methodological appendix. Turco describes the process of gaining access to the company, gathering observations and interview data, and iteratively analyzing her notes and memos, all of which will be familiar to many. However, this section is unique in offering a series of self-critical reflections on the work of organizational ethnography, an explicit description of the personal toll the work exacted from her, and an account of the sometimes painful experience of receiving feedback from her subjects as the analysis emerged.

Ultimately, Turco argues that embracing open communication in firms is a transformative way forward. While we in Communication may agree, what remains for us is to investigate what this means for how we understand voice in organizations and how we assess the role of technology and platforms for communication.